
The development of robots that can understand and follow spoken instructions has great potential in a variety of areas, and holds the promise of significantly improving human-machine interaction. One promising application is in the field of home automation, where voice-controlled robots can assist with tasks such as controlling smart appliances, adjusting room temperatures, or managing household security systems. By understanding natural language commands, these robots can integrate seamlessly into domestic settings, offering convenience and efficiency for users, especially those with mobility constraints or busy lifestyles.

In industrial and manufacturing environments, robots equipped with natural language processing capabilities can streamline production processes and improve operational efficiency. Workers can issue verbal commands to robots for tasks such as material handling, assembly line operations, or quality control inspections. Integrating spoken instructions in this way enables more intuitive and flexible human-robot collaboration, boosting productivity and making complex manufacturing operations easier to carry out.

Although these robots have immense potential, the complexity of natural language comprehension remains a significant challenge. Interpreting the nuances and context of human speech requires sophisticated natural language processing algorithms that can discern the semantic meaning behind verbal commands. Furthermore, robots must integrate environmental perception to understand how spoken language relates to their surroundings. This requires advanced sensor technologies and perception capabilities to interpret the physical context and spatial relationships, enabling robots to execute tasks accurately and in line with human expectations.

Researchers at MIT CSAIL wanted to give robots the human-like ability to understand natural language and use that information to interact with real-world environments. Toward that goal, they developed a system called F3RM (Feature Fields for Robotic Manipulation). F3RM can interpret open-ended language prompts, then use three-dimensional features inferred from two-dimensional images, together with a vision foundation model, to locate targets and work out how to interact with them. In this work, a robotic arm with six degrees of freedom was used to demonstrate the F3RM system.

The system first captures a set of 50 images of the robot's surroundings from a variety of angles. These two-dimensional images are used to build a neural radiance field (NeRF) that provides a 360-degree, three-dimensional representation of the area. Next, the CLIP vision foundation model, which was trained on hundreds of millions of images, is used to create a feature field that adds geometric and semantic information to the model of the environment.
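The article does not detail how the feature field is queried. As a rough illustration of the general idea, assuming each sampled 3D point carries a feature vector in the same embedding space as a CLIP text embedding, locating an object comes down to finding the point whose feature is most similar to the text query. The sketch below uses plain cosine similarity; `locate_object`, the toy three-dimensional vectors, and the point sampling are hypothetical stand-ins rather than F3RM's code, and real CLIP features have hundreds of dimensions.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def locate_object(points: List[Point],
                  features: List[Sequence[float]],
                  text_embedding: Sequence[float]) -> Tuple[Point, float]:
    """Return the sampled point whose feature best matches the query, plus its score.

    Assumption (hypothetical): features[i] is the feature-field value at points[i],
    and it lives in the same embedding space as text_embedding.
    """
    if not points or len(points) != len(features):
        raise ValueError("need exactly one feature vector per sampled point")
    scores = [cosine_similarity(f, text_embedding) for f in features]
    best = max(range(len(points)), key=scores.__getitem__)
    return points[best], scores[best]


# Toy scene: three sampled points with made-up 3-D "features".
points = [(0.0, 0.0, 0.0), (0.5, 0.1, 0.2), (1.0, 0.3, 0.0)]
features = [[1.0, 0.0, 0.0], [0.1, 0.9, 0.1], [0.0, 0.2, 1.0]]
query = [0.0, 1.0, 0.0]  # stand-in for the text embedding of a prompt such as "mug"

location, score = locate_object(points, features, query)
print(location)  # (0.5, 0.1, 0.2)
```

A real system would compare many thousands of sampled points and would likely normalize or contrast scores against generic queries, but the core operation is the same similarity lookup.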

The final step of the process involves training F3RM on data from a few examples of how the robot can perform a particular interaction. With that data, the robot is ready to handle a user request. After the object of interest in the request is located in three-dimensional space, F3RM searches for the grasp that is most likely to succeed in picking up the object. The algorithm also scores each candidate solution to ensure that the option most relevant to the user's prompt is chosen. After verifying that no collisions will occur, the plan is executed.
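The article only outlines this search. A minimal sketch of the final selection step, assuming each candidate grasp has already been given one score for how well it matches the demonstrations and another for its relevance to the prompt, could combine the two, rank the candidates, and return the highest-ranked one that passes a collision check. The `GraspCandidate` fields, the equal weighting, and the `in_collision` callback are all hypothetical; F3RM's actual scoring and collision checking are not described here.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class GraspCandidate:
    """A hypothetical grasp proposal with precomputed scores."""
    name: str
    demo_similarity: float   # assumed: how closely this grasp matches the demonstrations
    prompt_relevance: float  # assumed: how relevant the target is to the user prompt


def select_grasp(candidates: List[GraspCandidate],
                 in_collision: Callable[[GraspCandidate], bool],
                 prompt_weight: float = 0.5) -> Optional[GraspCandidate]:
    """Return the best-scoring collision-free grasp, or None if every grasp collides."""
    def score(c: GraspCandidate) -> float:
        # Weighted blend of the two scores; the weighting is an illustrative choice.
        return (1 - prompt_weight) * c.demo_similarity + prompt_weight * c.prompt_relevance

    # Check collisions in ranked order, stopping at the first feasible grasp.
    for candidate in sorted(candidates, key=score, reverse=True):
        if not in_collision(candidate):
            return candidate
    return None


candidates = [
    GraspCandidate("mug handle", 0.9, 0.8),
    GraspCandidate("mug rim", 0.7, 0.8),
    GraspCandidate("nearby bowl", 0.95, 0.1),
]
blocked = {"mug handle"}  # pretend the handle grasp would hit a shelf
best = select_grasp(candidates, lambda c: c.name in blocked)
print(best.name)  # mug rim
```

Checking collisions lazily in ranked order means the potentially expensive collision test runs only until the first feasible grasp is found, and the bowl is never chosen despite its strong demonstration match because it scores poorly against the prompt.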

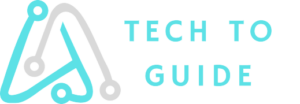

The group confirmed that F3RM is able to finding unknown objects that weren’t part of its coaching set, and likewise that the system can function at many ranges of linguistic element, simply distinguishing between, for instance, a cup stuffed with juice and a cup of espresso. Nevertheless, as it’s presently designed, F3RM is way too sluggish for real-time interactions. The method of capturing the pictures and calculating the three-dimensional characteristic map take a number of minutes. It’s hoped {that a} new technique will be developed that shall be able to performing on the similar stage with only a few pictures.F3RM allows robots to work together with the real-world through open-ended prompts (📷: W. Shen et al.)

An overview of the method (📷: W. Shen et al.)
