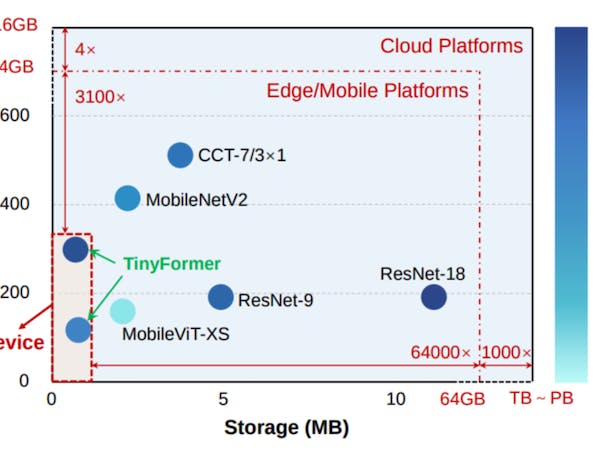

As IoT devices become more common across a wide range of industries, the integration of tinyML techniques has opened up many possibilities for deploying machine learning models on low-power microcontrollers. While existing applications such as keyword spotting and anomaly detection have demonstrated the potential of tinyML in these constrained environments, deploying more computationally intensive models remains a significant challenge that limits the overall utility of these devices.

TinyML offers a number of advantages tailored to the limitations of resource-constrained hardware. By allowing machine learning algorithms to run on microcontrollers with limited memory and processing capabilities, tinyML enables real-time data analysis and decision-making at the edge. This not only improves the efficiency of data processing in IoT devices but also minimizes latency, so that timely and useful insights can be derived from the collected data.

However, before cutting-edge algorithms like transformer models, which are essential in fields such as computer vision, speech recognition, and natural language processing, can run on microcontrollers, the existing technical challenges must first be addressed. A group of researchers at Beihang University and the Chinese University of Hong Kong recently put forth a method that enables transformer models to run on common microcontrollers. Called TinyFormer, it is a resource-efficient framework for designing and deploying sparse transformer models.

TinyFormer consists of three distinct steps: SuperNAS, SparseNAS, and SparseEngine. SuperNAS is a tool that automatically finds a suitable supernet in a large search space. This supernet, which represents many possible variations of the model, allows a wide range of candidate architectures and hyperparameters to be explored and evaluated efficiently within a single framework. The supernet is then trimmed down by SparseNAS, which searches it for sparse models with embedded transformer structures. In this step, sparse pruning is applied to the convolutional and linear layers of the model, after which INT8 quantization is applied to all layers. The compressed model is then optimized and deployed to a target microcontroller by the SparseEngine component of the pipeline.
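
The article does not say which pruning criterion or quantization scheme SparseNAS uses. As a minimal sketch of the two compression steps, the plain-Python code below assumes unstructured magnitude pruning and symmetric per-tensor INT8 quantization (common choices swapped in here for illustration, not TinyFormer's confirmed method). It zeroes the smallest weights and maps the rest to 8-bit integers; the function names are hypothetical.

```python
# Minimal sketch, assuming unstructured magnitude pruning and symmetric
# per-tensor INT8 quantization. These are illustrative stand-ins, not the
# exact procedure used by TinyFormer's SparseNAS stage.


def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction zeroed."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Approximate the original floats from the INT8 values."""
    return [v * scale for v in q]


# Example: prune half of a toy weight vector, then quantize what remains.
w = [0.8, -0.05, 0.3, -1.27, 0.01, 0.6, -0.2, 0.02]
sparse_w = magnitude_prune(w, sparsity=0.5)
q, scale = quantize_int8(sparse_w)
print("pruned:  ", sparse_w)
print("int8:    ", q, "scale:", scale)
print("restored:", dequantize(q, scale))
```

Pruning happens before quantization here so that the zeroed weights stay exactly zero as integers, which is what a sparse runtime needs in order to skip them.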

To test this method, ResNet-18, MobileNetV2, and MobileViT-XS models were trained for image classification on the CIFAR-10 dataset. TinyFormer was then used to compress and optimize the model before deploying it on an STM32F746 Arm Cortex-M7 microcontroller with 320 KB of memory and 1 MB of storage. A 731,000-parameter model requiring 942 KB of storage achieved an impressive average classification accuracy of 96.1%.
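
Some rough arithmetic shows why compression is unavoidable on this board. The sketch below assumes binary units (1 KB = 1,024 bytes) and counts only dense weight storage, ignoring activations, indices, and code; the helper names are hypothetical.

```python
# Back-of-the-envelope storage check for the reported deployment target.
# Assumptions (not from the article): KB/MB are binary units (1 KB = 1024 bytes),
# and each weight is stored densely at the stated precision with no overhead.

PARAMS = 731_000
FLASH_BYTES = 1 * 1024 * 1024      # 1 MB of storage on the STM32F746
REPORTED_MODEL_BYTES = 942 * 1024  # 942 KB reported for the final model


def dense_weight_bytes(n_params, bytes_per_param):
    """Bytes needed to store every parameter densely at a fixed width."""
    return n_params * bytes_per_param


def fits(n_bytes, budget=FLASH_BYTES):
    """True if n_bytes fits within the storage budget."""
    return n_bytes <= budget


fp32_bytes = dense_weight_bytes(PARAMS, 4)  # 32-bit floats
int8_bytes = dense_weight_bytes(PARAMS, 1)  # 8-bit integers after quantization

for label, size in [("FP32", fp32_bytes), ("INT8", int8_bytes),
                    ("reported", REPORTED_MODEL_BYTES)]:
    print(f"{label:>8}: {size / 1024:8.1f} KB  fits in flash: {fits(size)}")
```

Stored as 32-bit floats, the 731,000 weights alone would take roughly 2.9 MB, nearly three times the available storage, while at one byte per parameter they need about 714 KB. The reported 942 KB is larger than the raw INT8 weights; the article does not break down what accounts for the difference.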

The runtime performance of the system was also evaluated. Specifically, SparseEngine was compared with the popular CMSIS-NN library and was found to outperform it in both inference latency and storage usage. Inference ran 5.3 to 12.2 times faster, and storage requirements were reduced by 9% to 78% with SparseEngine.
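
One intuition for how a sparse runtime can save both time and space is that pruned weights need neither storing nor multiplying. The article does not describe SparseEngine's actual format, so the sketch below uses a generic compressed sparse row (CSR) layout with INT8 values and assumed 2-byte indices; it is not SparseEngine's implementation, and the function names are hypothetical.

```python
# Illustrative sketch only: SparseEngine's real storage format and kernels are
# not described in the article. This uses a generic CSR (compressed sparse row)
# layout with INT8 values and assumed 2-byte column indices and row pointers.


def to_csr(dense):
    """Encode a dense INT8 matrix as (values, col_idx, row_ptr), dropping zeros."""
    values, col_idx, row_ptr = [], [], [0]
    for row in dense:
        for j, v in enumerate(row):
            if v != 0:
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr


def csr_matvec(values, col_idx, row_ptr, x):
    """Multiply the CSR matrix by vector x, touching only non-zero weights."""
    out = []
    for r in range(len(row_ptr) - 1):
        acc = 0  # on a microcontroller this would typically be an int32 accumulator
        for k in range(row_ptr[r], row_ptr[r + 1]):
            acc += values[k] * x[col_idx[k]]
        out.append(acc)
    return out


def dense_bytes(dense):
    """Storage for a dense INT8 matrix: one byte per weight."""
    return sum(len(row) for row in dense)


def csr_bytes(values, col_idx, row_ptr, index_bytes=2):
    """Storage for the CSR form: 1-byte values plus fixed-width indices."""
    return len(values) + index_bytes * (len(col_idx) + len(row_ptr))


# Example: a 4 x 16 weight matrix with only 4 non-zero entries (~94% sparse).
W = [[0] * 16 for _ in range(4)]
W[0][3], W[1][0], W[2][15], W[3][8] = 12, -7, 5, -3
x = list(range(16))

csr = to_csr(W)
print("result:", csr_matvec(*csr, x))
print("dense bytes:", dense_bytes(W), "CSR bytes:", csr_bytes(*csr))
```

In this toy case the CSR form needs 22 bytes instead of 64 and performs 4 multiply-accumulates instead of 64. At low sparsity the index overhead can make CSR larger than dense storage, so savings of this kind depend on how aggressively the model is pruned.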

Maintaining high accuracy while reducing the computational workload of an algorithm is a difficult task, but TinyFormer has been shown to do an admirable job of it. By striking a delicate balance between efficiency and performance, this method appears poised to enable many new tinyML applications in the near future.

TinyFormer shrinks transformer models for microcontrollers, but maintains accuracy (📷: J. Yang et al.)

An overview of the framework (📷: J. Yang et al.)

Deployment with SparseEngine (📷: J. Yang et al.)
